Project information

- Category: Application

- Client: HackCamp 2022

- Project date: November 6, 2022

- Project URL: https://devpost.com/software/equal-writes

Details

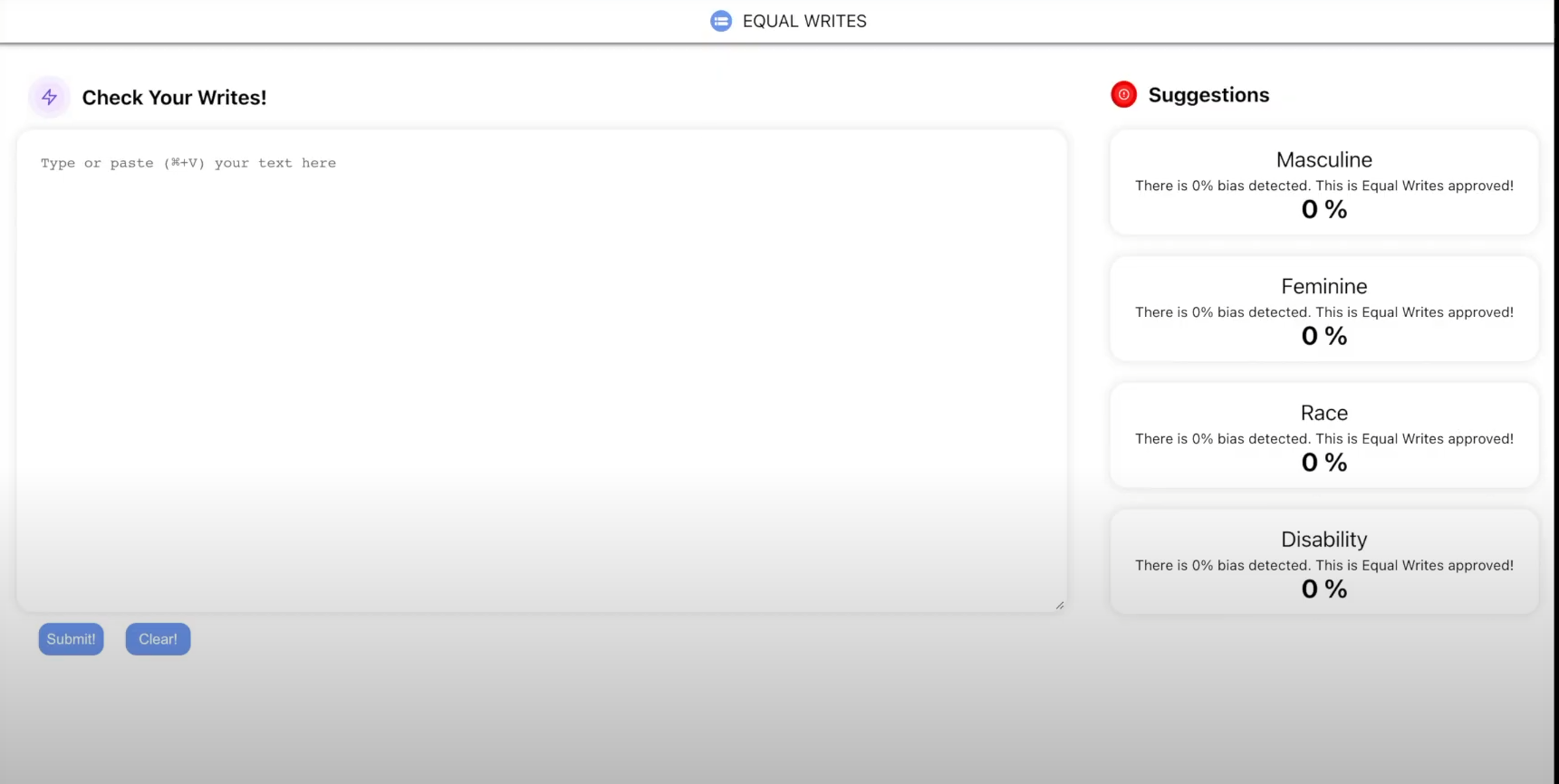

Implicit biases in language and writing result in unequal opportunities in hiring. From job listings to recommendation letters, these biases have been shown to influence hiring decisions. Gendered words, racialized terms, and ableist language all contribute to sustained inequality. Studies show that gendered or racialized wording in job postings leads to fewer female and minority applicants. They also show that recommendation letters containing "feminine" words lead recruiters to see the applicant as less qualified. These biases reinforce workplace inequality and place female and marginalized applicants at a disadvantage in the job market.